I like interacting with interesting people. This is my personal blog, but if you want to chat, I’m active on Twitter.

What does it mean when a computer can write about our problems better than we can?

People have been talking a lot about GPT-3, but more as a novelty than a tool (don’t know what GPT-3 is? Look here). Some clever people have even figured out how to get it to generate code from descriptions. Yet I think that the best use cases lie outside of tech.

I believe that GPT-3 has the potential to change the way we write. But I can’t just tell people that; most of them won’t believe it. People need at least some proof before they begin to take these things seriously.

So I made proof.

Over the last two weeks, I’ve been promoting a blog written by GPT-3.

I would write the title and introduction, add a photo, and let GPT-3 do the rest. The blog has had over 26 thousand visitors, and we now have about 60 loyal subscribers...

And only ONE PERSON has noticed it was written by GPT-3.

People talk about how GPT-3 often writes incoherently and irrationally. But that doesn’t keep people from reading it… and liking it.

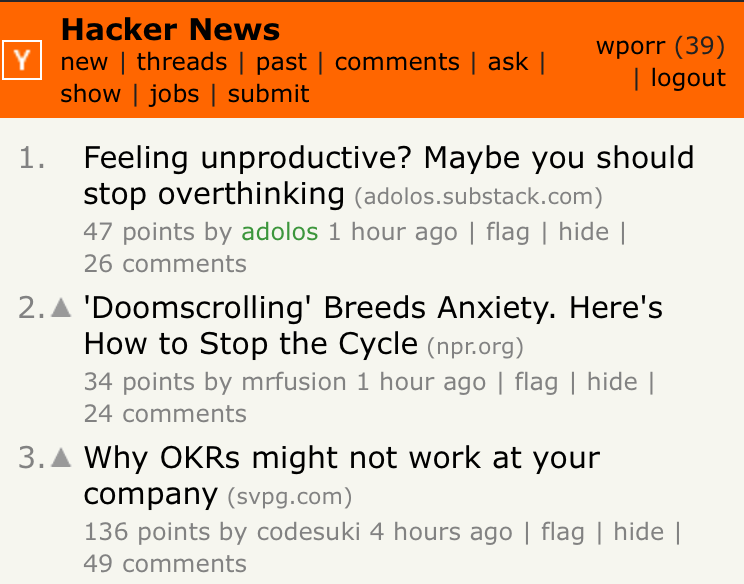

In fact, the very first post made it to the number one spot on Hacker News.

Manuel Araoz had done something similar with his article, but his approach was different. People were surprised when he revealed the text was written by GPT-3. It was the reveal that made the article interesting; otherwise, it’s just a crappy piece of writing (what GPT-3 wrote, that is; the rest is quite good).

In this post, not only did people not realize they were reading generated text, but they enjoyed the writing so much that they voted it above dozens of other human-written articles.

I must confess, I actually lied earlier when I told you only one person noticed. Only one person reached out to me to ask if GPT-3 was writing the articles. However, there were a few commenters on Hacker News who also guessed it. Funnily enough, nobody took notice because the community downvoted the comments.

I felt a little bad considering that it really was written by GPT-3, but it’s still pretty funny.

Regardless, I think this is enough to prove that the concept works. So, what does it mean?

The future of online media

The biggest benefit of GPT-3 is efficiency. All I need to write is a good title and intro. I could write five of them in an hour and publish them all in one day if I wanted to.

In other words, one good writer with GPT-3 can now do the same work that took a team of content creators before.

If you read some of the content I made, you may not be convinced about its quality. Indeed, there are traces of illogic, difficulty with staying on topic, issues with repetition, etc. I chose to leave the content as unedited as possible [1] for the purposes of this experiment.

However, with very minor editing, you could make these decent. Cut out irrelevant stuff, write a conclusion, and boom - people don't stand a chance of telling the difference.

So, what does this mean for online content going forward? Well, I’m not entirely sure.

For fact-based content producers, you’re (probably) safe. There’s a reason I chose a self-help style theme for the blog. GPT-3 is great at creating beautiful language that touches emotion, not hard logic and rational thinking.

However, I think the real value here is not in blog posts; that was just to prove the concept. Its true value is as a writing tool. The invention of GPT-3 for writers is like the invention of the McCormick reaper for farmers. It increases efficiency, and thus reduces labor costs, like any other technological innovation. The difference is, this is one of the first times technology has the potential to affect jobs in the creative sector.

For big media companies, this could mean huge savings. Again, for fact-based reporting you won’t be able to just let GPT-3 write the thing for you. As a supplementary tool, however, I think it could certainly speed up the process, saving them quite a bit of cash. Let’s do some napkin math, shall we?

Buzzfeed Inc. has 1700 employees, and the average base salary for a writer is 42k USD according to Glassdoor. I don’t know how many of those employees are writers (if you do, message me), but I’m going to guess it’s a few hundred. For our purposes, let's say 400 writers. As another simplification, let’s assume annual revenue is tied to the number of articles produced (i.e. as long as the number of articles produced stays the same, annual revenue should also remain the same). Let's say that for the kind of content on this website, GPT-3 can only increase efficiency by 50% (keep in mind that for self-help style writing, the speedup was over 5x for me, but I’ve also never worked in media, so I’m being conservative). Remaining even more conservative, let's say that only half of the writers at Buzzfeed can benefit from these efficiency gains.

This would mean that ~133 writers with GPT-3 can produce the same amount of content as 200 writers without it (200 / 1.5 ≈ 133). If we view this in terms of labor costs, replacing the 200-person team with a 133-person, GPT-3-supported team would cut 67 salaries at 42k USD each, saving Buzzfeed about 3 million dollars annually.
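For anyone who wants to play with the numbers, here is the napkin math as a rough Python sketch. The inputs are just my guesses from above (400 writers, half of them benefiting, a 50% efficiency gain, 42k USD average salary), and the function and variable names are made up for illustration. It also assumes, like the rest of this estimate, that output stays constant and that salary is the only cost that changes.

```python
# Napkin math for the Buzzfeed estimate. Every input is a guess from the post,
# not real company data.
WRITERS_TOTAL = 400        # guessed number of writers
SHARE_BENEFITING = 0.5     # assume only half the writers gain anything
EFFICIENCY_GAIN = 0.5      # 50% more output per writer with GPT-3
AVG_SALARY_USD = 42_000    # average base salary figure cited from Glassdoor


def writers_needed(team_size: float, efficiency_gain: float) -> float:
    """Headcount that matches the output of `team_size` unassisted writers."""
    return team_size / (1 + efficiency_gain)


def annual_savings(team_size: float, efficiency_gain: float, salary: float) -> float:
    """Salary saved by shrinking the team while keeping output constant."""
    return (team_size - writers_needed(team_size, efficiency_gain)) * salary


affected = WRITERS_TOTAL * SHARE_BENEFITING
needed = writers_needed(affected, EFFICIENCY_GAIN)
savings = annual_savings(affected, EFFICIENCY_GAIN, AVG_SALARY_USD)

print(f"{affected:.0f} writers -> about {needed:.0f} writers with GPT-3")
print(f"Estimated annual savings: ${savings:,.0f}")
```

With these inputs the savings come out to roughly 2.8 million dollars, which I rounded to 3 million. Changing `EFFICIENCY_GAIN` or `SHARE_BENEFITING` shows how sensitive the estimate is to those two guesses.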

Honestly, unless GPT-3 ends up being a total flop for content production, I think this number could be much higher.

However, I don’t think Buzzfeed is going to start making their writers use GPT-3. I think their staff will be too resistant to it. They may be offended at the suggestion that a computer can write better than they can, or feel that it cramps their style. But given how much these companies are struggling during COVID, who knows what they’ll do.

This leaves room for a new kind of media company. One that’s fast and lean. The writing team will be small, but experts at bending GPT-3 to their will. It’s a 2006 Chevy Suburban vs a 2020 Tesla. You can't mod your Suburban into a Tesla; they’re completely different models.

Final thoughts

I had a lot of fun doing this experiment. Some people will probably get mad at me for tricking people, but whatever. The whole point of releasing GPT-3 in private beta is so the community can show OpenAI new use cases that they should either encourage or look out for.

Oh also, I don’t actually have access to GPT-3. I had to find a PhD student who was kind enough to help me out, but not so kind as to give me the API keys outright. So I’ve been having to send him prompts and adjust parameters manually over messages…

Sam Altman, if you’re reading this, can I please get access to GPT-3 so I can leave this poor PhD student alone?

Also, if you’re curious, here is how I revealed the joke to my subscribers.

[1] There were, on occasion, small things that had to be changed or they would have been dead giveaways. For instance, it once attributed a Bob Dylan quote to Emerson. There were also some simple grammatical errors.

Can you let it write headlines and introductions for future articles, and so reduce even the ideas you have to come up with?

Could there also be a big unintended consequence that not many people seem to have thought about?

Hypothetically, say Buzzfeed or a new media company can churn out 5 times as much content with the same resources (or fewer). So that's 5 times as many pages competing for eyeballs in an environment with low advertising rates because people already have too much to consume in a day... So it's unlikely to increase their revenue long term. Which is why so many media companies are already looking at alternatives (subscriptions, email newsletters, podcasts, eCommerce etc).

And then there's the unintended consequence of wide AI adoption.

If I pay for a book, magazine or newspaper, I'm not just buying the content. I'm buying the labour of the people involved, who have spent time learning their craft, and researching their stories. So it's a fair exchange for me to pay for a book by William Gibson, for example, because I know at the end of the financial chain some of the money will be going to a person who has invested time and effort into the creation of the product I've bought.

If the only human input is five minutes spent on a headline and intro, which will probably then just be spun into multiple variations for testing purposes, why would I assign a monetary value to it? There's no labour to be rewarded, and no bond with the writer (which is a big driver of me spending money on writing). Instead it's just some very clever AI churning out words which are meaningless to it.

Rather than saving content companies cash, it seems more likely to entirely devalue writing as a medium, which has pretty big implications for how humanity communicates in the future.